Node Details

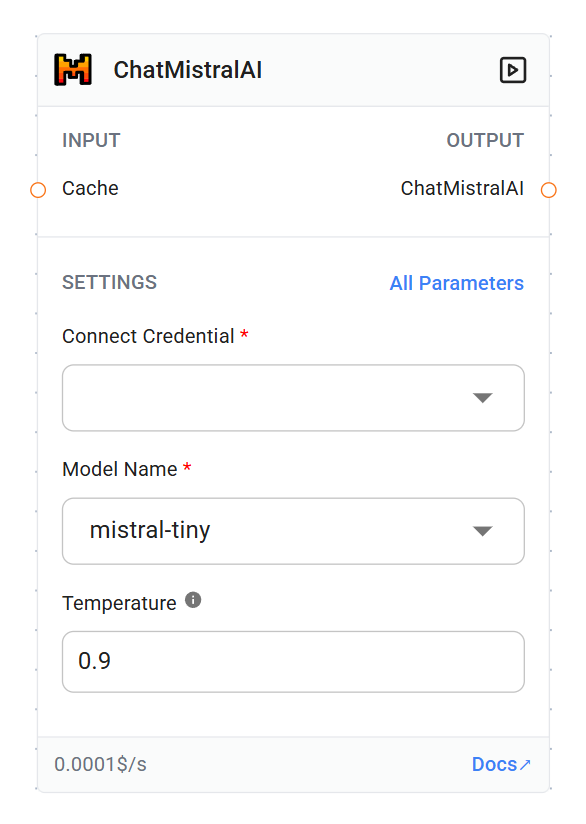

- Name: chatMistralAI

- Type: ChatMistralAI

- Version: 3.0

- Category: Chat Models

Base Classes

- ChatMistralAI

- [Other base classes from ChatMistralAI]

Parameters

Credential

- Label: Connect Credential

- Name: credential

- Type: credential

- Credential Names: mistralAIApi

Cache (optional)

- Type: BaseCache

- Description: Optional cache that stores model responses so that identical requests can be answered without calling the API again
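To illustrate what a cache connected to this input does, here is a minimal in-memory sketch that stores generations under a key combining the prompt and the model configuration. The class `InMemoryPromptCache` and its `lookup`/`update` methods are hypothetical: they loosely mirror the shape of LangChain's `BaseCache`, whose real methods are asynchronous, but are synchronous here for brevity.

```typescript
// Hypothetical, simplified prompt cache for illustration only. It mimics the
// lookup/update shape of LangChain's BaseCache but is synchronous for brevity.
type Generation = { text: string };

class InMemoryPromptCache {
  // Stored generations, keyed by model settings plus prompt.
  private readonly store = new Map<string, Generation[]>();

  // JSON-encode the pair so that no prompt/llmKey combination can collide with another.
  private key(prompt: string, llmKey: string): string {
    return JSON.stringify([llmKey, prompt]);
  }

  // Returns the cached generations, or null on a cache miss.
  lookup(prompt: string, llmKey: string): Generation[] | null {
    return this.store.get(this.key(prompt, llmKey)) ?? null;
  }

  // Records the generations produced for this prompt and model configuration.
  update(prompt: string, llmKey: string, value: Generation[]): void {
    this.store.set(this.key(prompt, llmKey), value);
  }
}

const cache = new InMemoryPromptCache();
const llmKey = JSON.stringify({ model: "mistral-tiny", temperature: 0.9 });
cache.update("Hello", llmKey, [{ text: "Hi there!" }]);
console.log(cache.lookup("Hello", llmKey)); // cache hit
// A different temperature produces a different key, so this is a miss (null).
console.log(cache.lookup("Hello", JSON.stringify({ model: "mistral-tiny", temperature: 0.2 })));
```

Including the model settings in the key matters: a response generated at one temperature or by one model should not be reused for a request with different settings.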

Model Name

- Type: asyncOptions

- Default: “mistral-tiny”

- Description: The name of the Mistral AI model to use

Temperature (optional)

- Type: number

- Default: 0.9

- Range: 0.0 to 1.0

- Description: Controls the randomness of the output. Higher values make the output more varied; lower values make it more focused and deterministic
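The effect of temperature can be sketched with the standard temperature-scaled softmax, in which the model's logits are divided by T before being normalized into probabilities. This shows the general technique, not Mistral AI's server-side code, and `softmaxWithTemperature` is a hypothetical helper.

```typescript
// Illustrative only: the conventional way a sampling temperature reshapes a
// next-token distribution. Not Mistral AI's actual implementation.
function softmaxWithTemperature(logits: number[], temperature: number): number[] {
  if (temperature <= 0) {
    // APIs commonly treat T = 0 as greedy decoding (always pick the top token).
    throw new RangeError("temperature must be > 0 for this sketch");
  }
  const scaled = logits.map((z) => z / temperature);
  // Subtract the maximum before exponentiating for numerical stability.
  const max = Math.max(...scaled);
  const exps = scaled.map((z) => Math.exp(z - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

const logits = [2.0, 1.0, 0.5];
for (const t of [0.2, 0.9]) {
  const probs = softmaxWithTemperature(logits, t).map((p) => p.toFixed(3));
  console.log(`T=${t}:`, probs.join(", "));
}
```

At T = 0.2 almost all probability lands on the first token, while at T = 0.9 the lower-ranked tokens keep a meaningful share, which is why higher values produce more varied output.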

Max Output Tokens (optional)

- Type: number

- Description: The maximum number of tokens to generate in the completion

Top Probability (optional)

- Type: number

- Description: Nucleus sampling parameter. Only the most likely tokens whose cumulative probability reaches this value are considered; for example, 0.9 restricts sampling to the top 90% of the probability mass
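A minimal sketch of nucleus (top-p) filtering, assuming the model has already assigned a probability to each candidate token: sort by probability, keep the smallest prefix whose cumulative probability reaches the threshold, and renormalize what remains. `nucleusFilter` is a hypothetical helper showing the general technique, not Mistral AI's internal code.

```typescript
// Illustrative nucleus (top-p) filter over a token -> probability map.
function nucleusFilter(probs: Map<string, number>, topP: number): Map<string, number> {
  if (topP <= 0 || topP > 1) throw new RangeError("topP must be in (0, 1]");
  // Sort tokens from most to least likely.
  const sorted = [...probs.entries()].sort((a, b) => b[1] - a[1]);
  const kept: [string, number][] = [];
  let cumulative = 0;
  for (const [token, p] of sorted) {
    kept.push([token, p]);
    cumulative += p;
    if (cumulative >= topP) break; // smallest prefix that reaches the threshold
  }
  // Renormalize so the surviving probabilities sum to 1.
  return new Map(kept.map(([token, p]) => [token, p / cumulative]));
}

const next = new Map([["cat", 0.5], ["dog", 0.3], ["fish", 0.15], ["rock", 0.05]]);
console.log(nucleusFilter(next, 0.75)); // keeps only "cat" and "dog"
```

Sampling then proceeds from the filtered distribution, so unlikely tokens such as "fish" and "rock" can never be chosen at this setting.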

Random Seed (optional)

- Type: number

- Description: Seed for random sampling. If set, repeated calls with the same seed and inputs generate deterministic results
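Why a seed makes output repeatable can be shown with a seeded pseudo-random generator: the same seed yields the same stream of random numbers, and therefore the same sampled tokens. The sketch uses the mulberry32 generator with inverse-CDF sampling; `mulberry32`, `sampleIndex`, and `sampleSequence` are illustrative helpers, and Mistral AI's actual generator is not documented here, so treat this as an illustration of the concept only.

```typescript
// mulberry32: a small 32-bit seeded PRNG returning floats in [0, 1).
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Draws one index from a discrete distribution via inverse-CDF sampling.
function sampleIndex(probs: number[], rng: () => number): number {
  const r = rng();
  let cumulative = 0;
  for (let i = 0; i < probs.length; i++) {
    cumulative += probs[i];
    if (r < cumulative) return i;
  }
  return probs.length - 1; // guard against floating-point shortfall
}

// Samples n indices using a fresh generator seeded with `seed`.
function sampleSequence(seed: number, probs: number[], n: number): number[] {
  const rng = mulberry32(seed);
  return Array.from({ length: n }, () => sampleIndex(probs, rng));
}

const probs = [0.5, 0.3, 0.2];
console.log(sampleSequence(42, probs, 8));
console.log(sampleSequence(42, probs, 8)); // identical to the line above
```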

Safe Mode (optional)

- Type: boolean

- Description: Whether to inject a safety prompt before all conversations

Override Endpoint (optional)

- Type: string

- Description: Custom endpoint URL to use instead of the default Mistral AI API endpoint (for example, a proxy)
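The node delegates the HTTP call to the underlying ChatMistralAI client, so the sketch below is only an illustration of how the parameters above correspond to the fields of Mistral AI's public chat-completions API (`model`, `messages`, `temperature`, `top_p`, `max_tokens`, `random_seed`, `safe_prompt`) and how an override endpoint could replace the default base URL `https://api.mistral.ai`. The option names, `buildChatRequest`, and the assumption that the override is a base URL (not a full path) are hypothetical.

```typescript
// Hypothetical option names mirroring this node's parameters.
interface MistralNodeOptions {
  modelName: string;
  temperature?: number;
  maxOutputTokens?: number;
  topP?: number;
  randomSeed?: number;
  safeMode?: boolean;
  overrideEndpoint?: string;
}

type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

const DEFAULT_BASE_URL = "https://api.mistral.ai";

// Builds the URL and JSON body for a chat-completions request (no network call).
function buildChatRequest(
  opts: MistralNodeOptions,
  messages: ChatMessage[],
): { url: string; body: Record<string, unknown> } {
  // An empty override falls back to the default; trailing slashes are stripped.
  const base = (opts.overrideEndpoint || DEFAULT_BASE_URL).replace(/\/+$/, "");
  const body: Record<string, unknown> = { model: opts.modelName, messages };
  // Send optional fields only when set, so the API applies its own defaults otherwise.
  if (opts.temperature !== undefined) body.temperature = opts.temperature;
  if (opts.topP !== undefined) body.top_p = opts.topP;
  if (opts.maxOutputTokens !== undefined) body.max_tokens = opts.maxOutputTokens;
  if (opts.randomSeed !== undefined) body.random_seed = opts.randomSeed;
  if (opts.safeMode !== undefined) body.safe_prompt = opts.safeMode;
  return { url: `${base}/v1/chat/completions`, body };
}

const req = buildChatRequest(
  { modelName: "mistral-tiny", temperature: 0.2, safeMode: true, overrideEndpoint: "https://mistral-proxy.example.com/" },
  [{ role: "user", content: "Summarize nucleus sampling in one sentence." }],
);
console.log(req.url); // https://mistral-proxy.example.com/v1/chat/completions
console.log(JSON.stringify(req.body));
```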

Functionality

The node initializes a ChatMistralAI instance from the provided configuration: it parses the parameter values and passes them to the model as options. The resulting model generates chat completions from input prompts.
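The parameter parsing described above can be sketched as a function that turns raw node inputs (assumed here to arrive as strings or booleans) into a typed options object, applying the documented defaults (`mistral-tiny`, temperature 0.9) and the documented 0.0 to 1.0 temperature range. `parseNodeInputs`, `RawInputs`, `ParsedOptions`, and their field names are hypothetical, not the node's actual source.

```typescript
// Hypothetical raw inputs: form fields assumed to arrive as strings or booleans.
type RawInputs = Record<string, string | boolean | undefined>;

// Hypothetical typed options handed to the model constructor.
interface ParsedOptions {
  modelName: string;
  temperature: number;
  maxTokens?: number;
  topP?: number;
  randomSeed?: number;
  safeMode?: boolean;
  endpoint?: string;
}

// Parses an optional numeric field; missing or empty values become undefined.
function optionalNumber(value: string | boolean | undefined, name: string): number | undefined {
  if (value === undefined || value === "") return undefined;
  const n = typeof value === "string" ? Number(value) : NaN;
  if (Number.isNaN(n)) throw new TypeError(`${name} must be numeric, got ${String(value)}`);
  return n;
}

function parseNodeInputs(raw: RawInputs): ParsedOptions {
  const rawModel = raw.modelName;
  const modelName = typeof rawModel === "string" && rawModel !== "" ? rawModel : "mistral-tiny";
  const temperature = optionalNumber(raw.temperature, "temperature") ?? 0.9; // documented default
  if (temperature < 0 || temperature > 1) {
    throw new RangeError("temperature must be between 0.0 and 1.0");
  }
  const opts: ParsedOptions = { modelName, temperature };
  const maxTokens = optionalNumber(raw.maxOutputTokens, "maxOutputTokens");
  if (maxTokens !== undefined) opts.maxTokens = Math.trunc(maxTokens); // token counts are integers
  const topP = optionalNumber(raw.topP, "topP");
  if (topP !== undefined) opts.topP = topP;
  const randomSeed = optionalNumber(raw.randomSeed, "randomSeed");
  if (randomSeed !== undefined) opts.randomSeed = randomSeed;
  const safeMode = raw.safeMode;
  if (typeof safeMode === "boolean") opts.safeMode = safeMode;
  const endpoint = raw.overrideEndpoint;
  if (typeof endpoint === "string" && endpoint !== "") opts.endpoint = endpoint;
  return opts;
}

console.log(parseNodeInputs({ temperature: "0.3", maxOutputTokens: "256", topP: "", safeMode: true }));
```

Leaving unset optional fields as `undefined` (rather than `0` or `false`) lets the model or API fall back to its own defaults.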

Usage

This node is typically used in workflows or applications that require natural language processing capabilities, particularly for chat-based interactions. It can be employed for tasks such as:

- Chatbots and conversational AI

- Text generation

- Language translation

- Question answering systems

- Other NLP tasks supported by Mistral AI’s models

Integration

The ChatMistralAI node can be integrated into larger systems that combine various AI models and tools. Because it provides a chat model, it can be connected to any other component in a modular AI workflow that accepts a chat model as input.