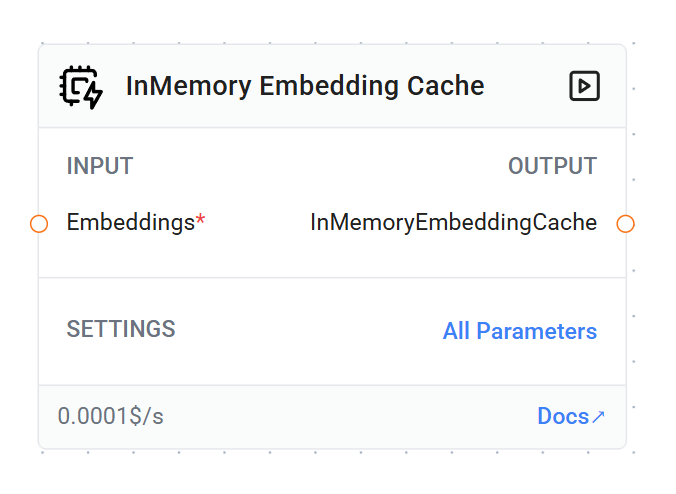

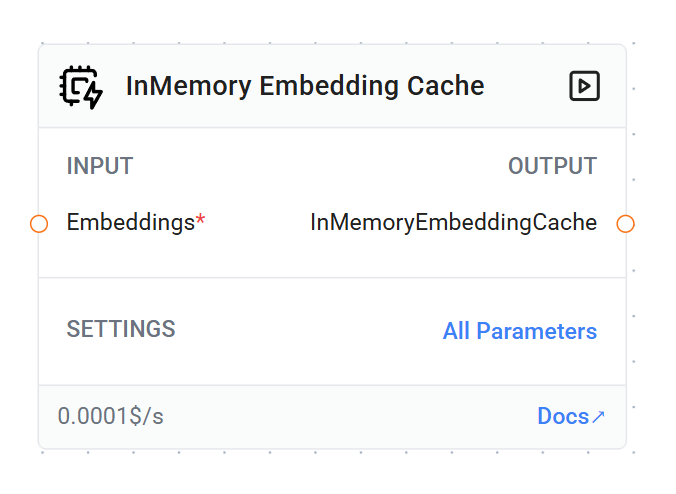

Node Details

- Name: inMemoryEmbeddingCache

- Type: InMemoryEmbeddingCache

- Category: [[Cache]]

- Version: 1.0

Description

This node implements an in-memory cache for embeddings. It stores computed embeddings in memory so that previously generated results can be retrieved quickly. This can reduce computation time and API calls when the same texts are embedded more than once within a session.

Parameters

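- Embeddings (Required)

  - Type: Embeddings

  - Description: The embedding model used to generate embeddings when a text is not found in the cache.

- Namespace (Optional)

  - Type: string

  - Description: A prefix added to every cache key, so that entries from different caches (for example, caches for different embedding models) are kept separate.

  - Additional Params: true (shown under Additional Parameters in the node’s settings)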

The node takes no direct user input. It sits between the embedding model and any node that consumes embeddings, such as a vector store.

Output

The node returns a CacheBackedEmbeddings object, which wraps the original embedding model with caching functionality.

How It Works

- When an embedding request is made:

  - The cache checks whether each input text has already been embedded.

  - If it has, the cached embedding is returned immediately.

  - If not, the embedding is generated with the provided embedding model, stored in the cache, and then returned.

- Cache keys combine the namespace (if provided) with a hash of the input text. Only an exact text match is served from the cache; similar but non-identical texts are embedded again.

- The cache is kept in memory for as long as the application is running.

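- Caching is implemented with LangChain’s `CacheBackedEmbeddings` class, which wraps the underlying embedding model with a key-value store. In LangChain.js this class caches document embeddings (`embedDocuments`); depending on the version, query embeddings (`embedQuery`) may be passed straight to the underlying model without caching.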
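The lookup-then-embed flow described above can be sketched as a small read-through cache. This is a minimal, self-contained TypeScript sketch, not the LangChain implementation: the synchronous `EmbeddingModel` interface, the `InMemoryCachedEmbeddings` class, and the `ToyModel` used in the demo are hypothetical, and the key is a JSON-encoded pair of namespace and text rather than LangChain’s hashed key.

```typescript
// Hypothetical, simplified synchronous interface (LangChain's Embeddings is async).
interface EmbeddingModel {
  embedDocuments(texts: string[]): number[][];
}

// Key from the namespace and the exact text; JSON-encoding the pair keeps the parts
// unambiguous. (LangChain instead prefixes a hash of the text with the namespace.)
function cacheKey(namespace: string, text: string): string {
  return JSON.stringify([namespace, text]);
}

// Read-through cache: look up each text, embed only the misses, store them, return all.
class InMemoryCachedEmbeddings implements EmbeddingModel {
  private readonly underlying: EmbeddingModel;
  private readonly namespace: string;
  // Plain Map in process memory: its contents vanish when the process exits.
  private readonly store = new Map<string, number[]>();

  constructor(underlying: EmbeddingModel, namespace = "") {
    this.underlying = underlying;
    this.namespace = namespace;
  }

  embedDocuments(texts: string[]): number[][] {
    const results: (number[] | undefined)[] = texts.map((t) =>
      this.store.get(cacheKey(this.namespace, t)),
    );

    // Indices of texts that were not found in the cache.
    const misses: number[] = [];
    results.forEach((r, i) => {
      if (r === undefined) misses.push(i);
    });

    if (misses.length > 0) {
      // One call to the underlying model for all misses, then store each result.
      const fresh = this.underlying.embedDocuments(misses.map((i) => texts[i]));
      misses.forEach((textIndex, j) => {
        this.store.set(cacheKey(this.namespace, texts[textIndex]), fresh[j]);
        results[textIndex] = fresh[j];
      });
    }
    return results as number[][];
  }
}

// Toy model for the demo: counts how many texts it was asked to embed.
class ToyModel implements EmbeddingModel {
  calls = 0;
  embedDocuments(texts: string[]): number[][] {
    this.calls += texts.length;
    return texts.map((t) => [t.length, t.charCodeAt(0)]);
  }
}

const toy = new ToyModel();
const cached = new InMemoryCachedEmbeddings(toy, "demo");
cached.embedDocuments(["hello", "world"]); // both miss: 2 texts embedded
cached.embedDocuments(["hello", "there"]); // "hello" hits, only "there" is embedded
console.log(toy.calls); // 3
```

Sending all misses in a single call mirrors how a wrapper would typically forward work to a remote embedding API. As written, duplicate texts inside one uncached batch are each sent to the model.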

Use Cases

- Improving response times for applications that frequently generate embeddings

- Reducing API costs by minimizing redundant embedding generation calls

- Enhancing performance in scenarios where the same texts are embedded repeatedly

- Optimizing vector search operations by caching frequently used embeddings
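To gauge how much an exact-match cache might save for a given workload, one can count how many requests repeat an earlier text exactly. The sketch below assumes a stream of single-text requests, an initially empty cache, and no eviction during the session; `estimateSavings` is a hypothetical helper, not part of the node.

```typescript
// Rough estimate of the embedding calls an exact-match cache would save.
// Assumptions (hypothetical): one text per request, empty cache at start, no eviction.
function estimateSavings(requests: string[]): { total: number; misses: number; hitRate: number } {
  const seen = new Set<string>();
  let misses = 0;
  for (const text of requests) {
    if (!seen.has(text)) {
      misses++; // first occurrence must be sent to the embedding model
      seen.add(text);
    }
  }
  const total = requests.length;
  return { total, misses, hitRate: total === 0 ? 0 : (total - misses) / total };
}

const stream = ["pricing", "refund policy", "pricing", "Pricing", "pricing"];
console.log(estimateSavings(stream)); // { total: 5, misses: 3, hitRate: 0.4 }
```

The capitalized "Pricing" counts as a miss, which is why inputs that are similar but not identical do not benefit from this kind of cache.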

Special Features

- Efficient Caching: Uses an in-memory store for fast lookup and storage.

- Session-Based: Cache is maintained for the duration of the application session.

- Automatic Integration: The output behaves like a regular embedding model, so it can be connected wherever an embedding model is expected.

- Namespace Support: Allows for organization of multiple caches within the same application.

- LangChain Integration: Built on top of LangChain’s `CacheBackedEmbeddings` class.
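Namespaces matter most when several caches share one store, for example when two different embedding models might embed the same text. The sketch below is hypothetical: `cachedEmbed`, the shared `Map`, and the two toy models stand in for real components, and it only illustrates why distinct namespaces keep entries from colliding.

```typescript
// Hypothetical shared store used by several caches.
type Store = Map<string, number[]>;

// Look up (namespace, text); on a miss, embed the text and store the result.
function cachedEmbed(
  store: Store,
  namespace: string,
  text: string,
  embed: (text: string) => number[],
): number[] {
  const key = JSON.stringify([namespace, text]); // namespace keeps entries apart
  const hit = store.get(key);
  if (hit !== undefined) return hit;
  const vector = embed(text);
  store.set(key, vector);
  return vector;
}

// Two toy "models" that produce different vectors for the same text.
const modelA = (t: string): number[] => [t.length];
const modelB = (t: string): number[] => [t.length * 10];

const shared: Store = new Map();

// Same (empty) namespace: model B silently receives model A's cached vector.
console.log(cachedEmbed(shared, "", "cache", modelA)); // [ 5 ]
console.log(cachedEmbed(shared, "", "cache", modelB)); // [ 5 ] (wrong for model B)

// Distinct namespaces, for example one per model, keep the entries separate.
console.log(cachedEmbed(shared, "model-a", "cache", modelA)); // [ 5 ]
console.log(cachedEmbed(shared, "model-b", "cache", modelB)); // [ 50 ]
```

Using the embedding model’s name as the namespace is one common way to avoid this kind of collision.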

Notes

- The cache is cleared when the application restarts, so every text is embedded again in a new session.

- This caching mechanism is most useful when the same texts are likely to be embedded multiple times within a single session.

- Because entries stay in memory for the whole session, memory usage grows with every unique text embedded. This matters when embedding large volumes of distinct texts.

- The cache’s effectiveness depends on how often exactly the same texts recur within a session.
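For a rough sense of memory usage, one can multiply the size of one stored vector by the number of unique texts. This sketch assumes each vector is stored as JSON-encoded text (an assumption about the storage format) and ignores keys and `Map` overhead, so the result is only a lower-bound estimate. `encodedBytes` and `estimateCacheBytes` are hypothetical helpers, and the sample vector values are illustrative.

```typescript
// Size in bytes of one vector, assuming it is stored as JSON text.
// JSON for numbers is plain ASCII, so string length equals UTF-8 byte length.
function encodedBytes(vector: number[]): number {
  return JSON.stringify(vector).length;
}

// Lower-bound estimate for `count` unique texts, using one representative vector.
// Key strings and Map bookkeeping are not counted.
function estimateCacheBytes(sample: number[], count: number): number {
  return encodedBytes(sample) * count;
}

console.log(encodedBytes([0.5, -0.25])); // 11, the length of "[0.5,-0.25]"

// Example: a 1536-dimensional vector with illustrative values, 10000 unique texts.
const sample = Array.from({ length: 1536 }, (_, i) => Math.sin(i) / 10);
const megabytes = estimateCacheBytes(sample, 10000) / (1024 * 1024);
console.log(`~${megabytes.toFixed(1)} MB`);
```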

The InMemory Embedding Cache node offers a simple way to optimize embedding-based applications. By avoiding redundant computation and improving response times, it can improve both the performance and the cost-effectiveness of systems that rely heavily on embeddings. It is most valuable where embedding latency matters and the same texts are likely to be processed multiple times within a session.